Support vector machines (SVMs)

Perceptron and Margins

Let dataset \(D=\{(x_1, y_1), \ldots, (x_n,y_n)\}\) be linearly separable with \(\gamma\)-margin where \(x_i \in \mathbb{R}^d\) and \(y_i \in \{-1, 1\}\).

Let \(w* \in \mathbb{R}^d\) be the weight vector s.t. \((w^{*T}x_i)y_i\ge\gamma\) \(\forall i\).

Let some \(R>0 \in \mathbb{R}\), s.t. \(\forall i\) \(||x_i||\le R\).

Therefore, the number of mistakes made by the algorithm is given by, \[ \text{\#mistakes} \le \frac{R^2}{\gamma^2} \]

Observations

Let \(w_{perc}\) be any weight vector which can linearly separate the dataset.

Therefore, we observe the following:

- “Quality” of the solution depends on the margin.

- Number of mistakes depend on \(w^*\)’s margin.

- \(w_{perc}\) need not necessarily be \(w^*\).

Hence, our goal should be to find the solution that maximizes the margin.

Margin Maximization

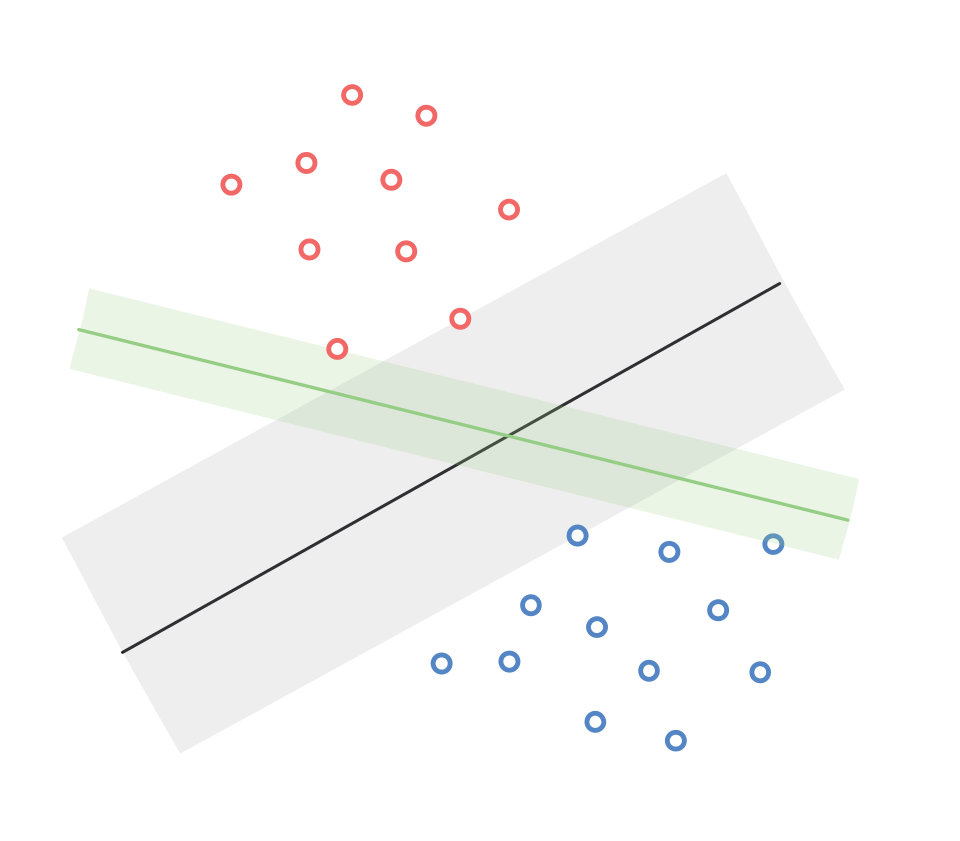

From the previous analysis, it is clear that a single dataset could have multiple linear classifiers with varying margins. The following diagram illustrates this phenomenon,

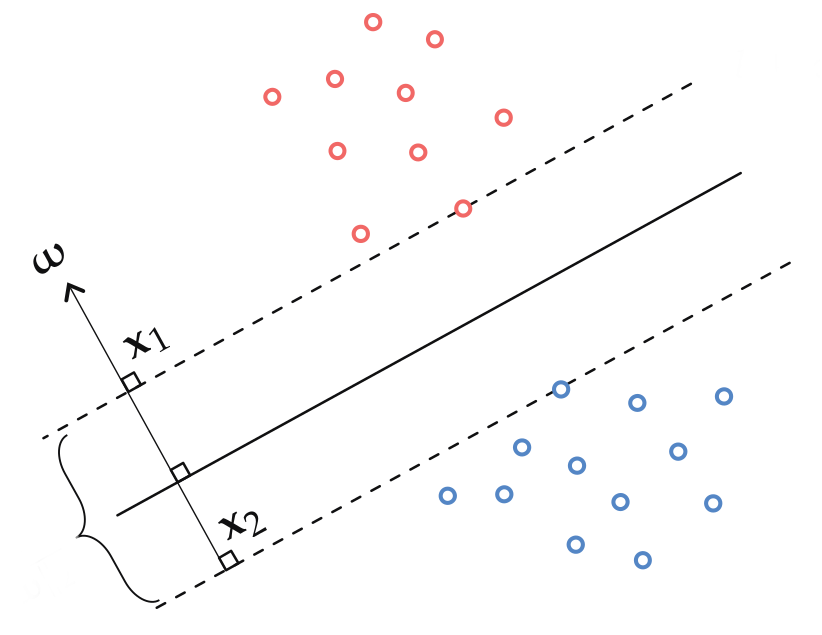

Therefore, for getting the best classifier, our goal can be written as, \[ \max_{w,\gamma} \gamma \] \[\begin{align*} s.t. (w^Tx_i)y_i &\ge \gamma \hspace{1em} \forall i \\ ||w||^2 &= 1\ \end{align*}\]\end{align*} The boundary of the margin is given by, \[\begin{align*} \{x:(w^Tx_i)y_i &= \gamma\}\\ \{x:(\frac{w}{\gamma}^Tx_i)y_i &= 1\}\\ \end{align*}\] From the above equation, we can see that \(\gamma\) depends on the width of \(w\). Therefore, we reformulate our goal as, \[ \max_{w} \text{width}(w) \] \[\begin{align*} s.t. (w^Tx_i)y_i &\ge 1 \hspace{1em} \forall i \\ \end{align*}\] Let the width be the distance between the two parallel margins, and let \(x\) and \(z\) be two points who are on the two lines exactly opposite to each other s.t. \(w^Tx=-1\) and \(w^Tz=1\) or vice versa.

Let \(x_1\) and \(x_2\) be two points which lie on opposite side of the decision boundary as well as on the margins.

Therefore, the width is given by, \[\begin{align*} ||x_1^Tw - x_2^Tw||_2^2 &= 2 \\ ||x_1-x_2||_2^2||w||^2_2 &= 2\\ \therefore ||x_1 - x_2||^2_2 &= \frac{2}{||w||^2_2} \end{align*}\]

Therefore, our objective function can be written as, \[ \max_{w} \frac{2}{||w||^2_2} \hspace{1em} s.t. (w^Tx_i)y_i \ge 1 \hspace{1em} \forall i \] Equivalently, \[ \min_{w} \frac{1}{2}||w||^2_2 \hspace{1em} s.t. (w^Tx_i)y_i \ge 1 \hspace{1em} \forall i \] Therefore finding the separating hyperplane with maximum margin is equivalent to finding the one with the smallest possible normal vector \(w\).

Constrained Optimization

Let a constrained optimization problem be formulated as follows, \[\begin{align*} \min_w f(w) \\ s.t. g(w) \le 0 \\ \end{align*}\] We can solve this problem using Lagrange Multipliers.

Lagrange multipliers are used in constrained optimization problems to find the optimal values of the objective function subject to a set of constraints. In Lagrange multipliers method, the constraints are incorporated into the objective function by introducing additional variables called Lagrange multipliers.

The Lagrange function \(\mathcal{L}(x, \alpha)\), for our above function, is defined as follows: \[ \mathcal{L}(w, \alpha) = f(w) + \alpha g(w) \hspace{1em} \forall w \] where \(\alpha \ge 0\).

Therefore, maximizing the Lagrange function w.r.t. \(\alpha\), \[\begin{align*} \max_{\alpha\ge0} \mathcal{L}(w, \alpha) &= \max_{\alpha\ge0} f(w) + \alpha g(w) \\ &= \begin{cases} \infty \hspace{2em} \text{if} \hspace{1em} g(w) > 0 \\ f(w) \hspace{1em} \text{if} \hspace{1em} g(w) \le 0 \end{cases} \end{align*}\] As the Lagrange function is equal to \(f(w)\) where \(g(w) \le 0\), we can rewrite our original function as, \[\begin{align*} \min_w f(w) &= \min_w \left [ \max_{\alpha\ge0} \mathcal{L}(w, \alpha) \right ] \\ &= \min_w \left [ \max_{\alpha\ge0} f(w) + \alpha g(w) \right ] \\ \end{align*}\] In general, we cannot swap the min and max functions unless all the functions involved are convex functions. Hence, as both \(f\) and \(g\) are convex functions in our example, we can rewrite them as follows, \[ \min_w \left [ \max_{\alpha\ge0} f(w) + \alpha g(w) \right ] \equiv \max_{\alpha\ge0} \left [ \min_w f(w) + \alpha g(w) \right ] \] Let’s rewrite the above constrained optimization problem with \(m\) constraints \(g_i(w) \le 0\) where \(i \in [1, m]\). This can written as, \[\begin{align*} \min_w f(w) &\equiv \min_w \left [ \max_{\alpha\ge0} f(w) + \sum _{i=1} ^m \alpha _i g_i(w) \right ] \\ &\equiv \max_{\alpha\ge0} \left [ \min_w f(w) + \sum _{i=1} ^m \alpha _i g_i(w) \right ] \\ \end{align*}\]

Formulating the Dual Problem

Our Objective function is as follows, \[ \min_{w} \frac{1}{2}||w||^2_2 \quad s.t. (w^Tx_i)y_i \ge 1 \quad \forall i \] The constraints can be written as follows, \[\begin{align*} (w^Tx_i)y_i &\ge 1 \quad \forall i \\ 1 - (w^Tx_i)y_i &\le 0 \quad \forall i \\ \end{align*}\] Let \(\alpha \in \mathbb{R}^d\) be the Lagrange multipliers, and let our Lagrange function be written as, \[\begin{align*} \mathcal{L}(w, \alpha) &= \frac{1}{2}||w||^2_2 + \sum _{i=1} ^n \alpha _i (1 - (w^Tx_i)y_i) \\ \min_w \max_{\alpha\ge0} \left [ \frac{1}{2}||w||^2_2 + \sum _{i=1} ^n \alpha _i (1 - (w^Tx_i)y_i) \right ] &\equiv \max_{\alpha\ge0} \min_w \left [ \frac{1}{2}||w||^2_2 + \sum _{i=1} ^n \alpha _i (1 - (w^Tx_i)y_i) \right ] \end{align*}\] Solving for the inner function of the dual problem, we get, \[\begin{align*} w_{\alpha}^* - \sum _{i=1} ^n \alpha _i x_i y_i = 0 \\ \therefore w_{\alpha}^* = \sum _{i=1} ^n \alpha _i x_i y_i \end{align*}\] Rewriting the above equation in vectorized form, we get, \[ w_{\alpha}^* = XY\alpha \quad \ldots[1] \] where \(X \in \mathbb{R}^{d \times n}\), \(Y \in \mathbb{R}^{n \times n}\), and \(\alpha \in \mathbb{R}^{n}\). \(X\) is the dataset, \(Y\) is the label diagonal matrix, where the diagonals are the labels. Rewriting the outer dual function, we get, \[\begin{align*} &\max_{\alpha\ge0} \left[ \frac{1}{2}||w||^2_2 + \sum _{i=1} ^n \alpha _i (1 - (w^Tx_i)y_i) \right] \\ &= \max_{\alpha\ge0} \left[ \frac{1}{2}w^Tw + \sum _{i=1} ^n \alpha_i - w^TXY\alpha \right] \\ &= \max_{\alpha\ge0} \left[ \frac{1}{2}(XY\alpha)^TXY\alpha + \sum _{i=1} ^n \alpha_i - (XY\alpha)^TXY\alpha \right] \quad \ldots\text{from }[1] \\ &= \max_{\alpha\ge0} \left[ \frac{1}{2}\alpha^TY^TX^TXY\alpha + \sum _{i=1} ^n \alpha_i - \alpha^TY^TX^TXY\alpha \right] \\ &= \max_{\alpha\ge0} \left[\sum _{i=1} ^n \alpha_i - \frac{1}{2}\alpha^TY^TX^TXY\alpha \right] \\ \end{align*}\]

Observations:

- As the dual problem solves for \(\alpha \ge 0\), its variable dimension is in \(\mathbb{R}^n_+\), while as the primal problem solves for \(w\), its variable dimension is in \(\mathbb{R}^d\).

- Solving the dual problem is “easier”.

- As the dual problem depends on \(X^TX\), it can be kernalized.

Some observations regarding the following equation, \[ w_{\alpha}^* = \sum _{i=1} ^n \alpha _i x_i y_i \]

- The optimal \(w^*\) is the linear combination of the datapoints where the importance of each datapoint is given by \(\alpha_i\) for the \(i^{th}\) point.

- Hence, there are points that are more important that others.

Support Vector Machine

Revisiting the Lagrangian function, \[ \min_w \left [ \max_{\alpha\ge0} f(w) + \alpha g(w) \right ] \equiv \max_{\alpha\ge0} \left [ \min_w f(w) + \alpha g(w) \right ] \] The primal function is represented on the left-hand side of the equation, while the right-hand side represents the dual function. \(w^∗\) and \(\alpha^*\) are the solutions derived for the primal and dual functions, respectively. When these solutions are inserted into the equation, we obtain, \[ \max_{\alpha\ge0} f(w^*) + \alpha g(w^*) = \min_w f(w) + \alpha^* g(w) \] But as \(g(w^*) \le 0\), the left hand side equates to \(f(w^*)\). \[ f(w^*) = \min_w f(w) + \alpha^* g(w) \] Substituting \(w^*\) for \(w\) in the right-hand side of the equation would result in a new right-hand side that is greater than or equal to the current one. \[ f(w^*) \le f(w^*) + \alpha^* g(w^*) \] \[ \therefore \alpha^* g(w^*) \ge 0 \quad \ldots [1] \] But, according to our constraints, \(\alpha^* \ge 0\) and \(g(w^*)\le0\). \[ \therefore \alpha^* g(w^*) \le 0 \quad \ldots [2] \] From \([1]\) and \([2]\), we get, \[ \alpha^* g(w^*) = 0 \] Rewriting the equation for multiple constraints, we get, \[ \alpha_i^* g(w^*_i) = 0 \quad \forall i \] Therefore, if one of the two is greater than zero, the other equals zero. We know that \(g(w^*) = 1-(w^Tx_i)y_i\). \[ \alpha_i^* (1-(w^Tx_i)y_i) = 0 \quad \forall i \] As the importance of the \(i^{th}\) datapoint is given by \(\alpha_i\), if \(\alpha_i > 0\), we get, \[ (w^Tx_i)y_i = 1 \] which means that the \(i^{th}\) datapoint lies on the “Supporting” hyperplane and contributes to \(w^*\).

Therefore, the datapoints whose \(\alpha_i > 0\) are known as Support Vectors and this algorithm is known as Support Vector Machine.

Support Vector Machines (SVMs) are a type of supervised learning algorithm used for classification and regression analysis. SVMs aim to find the optimal hyperplane that separates data points from different classes with the maximum margin. In the case of non-linearly separable data, SVMs use kernel functions to transform the data into a higher-dimensional space, where a linear decision boundary can be used to separate the data.

Insight: \(w^*\) is a sparse linear combination of the data points.

Hard-Margin SVM Algorithm

This algorithm only works if the dataset is linearly separable with a \(\gamma > 0\).

- Calculate \(Q=X^TX\) directly or using a kernel as per the dataset.

- Use the gradient of the dual formula (\(\alpha^T1 - \frac{1}{2}\alpha^TY^TQY\alpha\)), in the gradient descent algorithm to find a satisfactory \(\alpha\). Let the intial \(\alpha\) be a zero vector \(\in \mathbb{R}^n_+\).

- To predict:

- For non-kernelized SVM: \(\text{label}(x_{test}) = w^Tx_{test} = \sum _{i=1} ^n \alpha _i y_i(x_i^Tx_{test})\)

- For kernelized SVM: \(\text{label}(x_{test}) = w^T\phi(x_{test}) = \sum _{i=1} ^n \alpha _i y_ik(x_i^Tx_{test})\)

Soft-Margin SVM

Soft-Margin SVM is an extension of the standard SVM algorithm that allows for some misclassifications in the training data. This is useful when the data is not linearly separable, as it allows the SVM to still find a decision boundary that separates the classes as best as possible while allowing for some errors. The degree to which misclassifications are allowed is controlled by a regularization parameter(\(C\)), which is used to balance the trade-off between maximizing the margin and minimizing the number of misclassifications.

The primal formulation for this is given by, \[ \min_{w, \epsilon} \frac{1}{2}||w||^2_2 + C\sum _{i=1} ^n\epsilon_i \quad s.t. \quad (w^Tx_i)y_i + \epsilon_i \ge 1;\quad \epsilon_i \ge 0\quad \forall i \] where \(C\) is the hyperparameter that is used to balance the trade-off between maximizing the margin and minimizing the number of misclassifications, and \(\epsilon_i\) is the additional value required to satisy the constraints.

Dual Formulation for Soft-Margin SVM

The primal formulation for Soft-Margin SVM is given by, \[ \min_{w, \epsilon} \frac{1}{2}||w||^2_2 + C\sum _{i=1} ^n\epsilon_i \quad s.t. \quad (w^Tx_i)y_i + \epsilon_i \ge 1;\quad \epsilon_i \ge 0\quad \forall i \] where \(C\) is the hyperparameter that is used to balance the trade-off between maximizing the margin and minimizing the number of misclassifications, and \(\epsilon_i\) is the additional value required to satisy the constraints.

The Lagrangian function \(\mathcal{L}(w, \epsilon, \alpha, \beta)\) for our above function is defined as follows: \[ \mathcal{L}(w, \epsilon, \alpha, \beta) = \frac{1}{2}||w||^2_2 + C\sum _{i=1} ^n\epsilon_i + \sum _{i=1} ^n \alpha_i(1-(w^Tx_i)y_i - \epsilon_i) + \sum _{i=1} ^n \beta(-\epsilon_i) \] where \(\alpha_i \ge 0\) and \(\beta_i \ge 0\) \(\forall i\).

Dual Formulation

Maximizing the Lagrangian function w.r.t. \(\alpha\) and \(\beta\), and minimizing it w.r.t. \(w\) and \(\epsilon\), we get,

\[ \min _{w, \epsilon}\left [\max _{\alpha \ge 0; \beta \ge 0}\frac{1}{2}||w||^2_2 + C\sum _{i=1} ^n\epsilon_i + \sum _{i=1} ^n \alpha_i(1-(w^Tx_i)y_i - \epsilon_i) + \sum _{i=1} ^n \beta(-\epsilon_i) \right ] \]

The dual of this is given by,

\[ \max _{\alpha \ge 0; \beta \ge 0}\left [\min _{w, \epsilon}\frac{1}{2}||w||^2_2 + C\sum _{i=1} ^n\epsilon_i + \sum _{i=1} ^n \alpha_i(1-(w^Tx_i)y_i - \epsilon_i) + \sum _{i=1} ^n \beta(-\epsilon_i) \right ] \]

\[ \max _{\alpha \ge 0; \beta \ge 0}\left [\min _{w, \epsilon}\mathcal{L}(w, \epsilon, \alpha, \beta) \right ] \quad \ldots[1] \]

Differentiating the above function\([1]\) w.r.t. \(w\) while fixing \(\alpha\) and \(\beta\), we get, \[ \frac{d\mathcal{L}}{dw} = 0 \] \[ \frac{d}{dw} \frac{1}{2}||w||^2_2 + C\sum _{i=1} ^n\epsilon_i + \sum _{i=1} ^n \alpha_i(1-(w^Tx_i)y_i - \epsilon_i) + \sum _{i=1} ^n \beta(-\epsilon_i) = 0\\ \] \[ w_{\alpha, \beta}^* - \alpha_ix_iy_i = 0 \] \[ \therefore w_{\alpha, \beta}^* = \alpha_ix_iy_i \quad \ldots [2] \]

Differentiating the above function\([1]\) w.r.t. \(\epsilon_i \forall i\) while fixing \(\alpha\) and \(\beta\), we get,

\[ \frac{\partial\mathcal{L}}{\partial\epsilon_i} = 0 \] \[ \frac{\partial}{\partial\epsilon_i} \frac{1}{2}||w||^2_2 + C\sum _{i=1} ^n\epsilon_i + \sum _{i=1} ^n \alpha_i(1-(w^Tx_i)y_i - \epsilon_i) + \sum _{i=1} ^n \beta(-\epsilon_i) = 0 \] \[ C - \alpha_i -\beta_i = 0 \] \[ \therefore C = \alpha_i + \beta_i \quad \ldots [3] \]

Substituting the values of \(w\) and \(\beta\) from \([2]\) and \([3]\) in \([1]\), we get,

\[ \max _{\alpha \ge 0; \beta \ge 0; C = \alpha_i + \beta_i}\left [\frac{1}{2}||\alpha_ix_iy_i||^2_2 + C\sum _{i=1} ^n\epsilon_i + \sum _{i=1} ^n \alpha_i(1-((\alpha_ix_iy_i)^Tx_i)y_i - \epsilon_i) + \sum _{i=1} ^n (C-\alpha_i)(-\epsilon_i) \right ] \] \[ \max _{\alpha \ge 0; \beta \ge 0; C = \alpha_i + \beta_i}\left [\frac{1}{2}\alpha_i^Tx_i^Ty_i^Ty_ix_i\alpha_i + C\sum _{i=1} ^n\epsilon_i + \sum _{i=1} ^n \alpha_i-\alpha_i^Tx_i^Ty_i^Ty_ix_i\alpha_i - \sum _{i=1} ^n \alpha_i\epsilon_i - C\sum _{i=1} ^n\epsilon_i + \sum _{i=1} ^n \alpha_i\epsilon_i \right ] \] \[ \max _{\alpha \ge 0; \beta \ge 0; C = \alpha_i + \beta_i}\left [\sum _{i=1} ^n \alpha_i - \frac{1}{2}\alpha_i^Tx_i^Ty_i^Ty_ix_i\alpha_i\right ] \] \[ \therefore \max _{0 \le \alpha \le C}\left [\sum _{i=1} ^n \alpha_i - \frac{1}{2}\alpha_i^Tx_i^Ty_i^Ty_ix_i\alpha_i\right ] \] If \(C=0\), \(\alpha^*=0\) \(\rightarrow\) \(\displaystyle w^*=\sum _{i=1} ^n \alpha^*_i x_i y_i = 0\).

if \(C=\infty\), the equation will be equal to that of Hard-Margin SVM.

Complementary Slackness in Soft-Margin Support Vector Machines

Complementary slackness can be expressed through a set of equations as follows:

\(\forall i\), the following equation holds: \[ \alpha_i(1 - \mathbf{w}^{*T}\mathbf{x}_i y_i - \epsilon^*_i) = 0 \quad \ldots [1] \]

Additionally, the following relation must hold: \[ \beta_i(\epsilon_i^*) = 0 \quad \ldots [2] \]

Given these equations, various scenarios arise which elucidate the implications of complementary slackness in the context of Soft-Margin SVM.

Scenarios of Complementary Slackness

If \(\alpha_i^* = 0\):

In this case, \(\beta_i^* = C\) due to \(\alpha_i^* + \beta_i^* = C\). From equation [2], we deduce that \(\epsilon_i^* = 0\). Consequently, it follows that the decision function \((\mathbf{w}^{*T}\mathbf{x}_i)y_i + \epsilon_i^* \ge 1\) simplifies to \((\mathbf{w}^{*T}\mathbf{x}_i)y_i \ge 1\), indicating that the data point is correctly classified and lies outside the margin.If \(0 < \alpha_i^* < C\):

In this scenario, both \(\alpha_i^*\) and \(\beta_i^*\) assume positive values within the range \((0, C)\). Equation [2] implies \(\epsilon_i^* = 0\), while equation [1] gives \(1 - \mathbf{w}^{*T}\mathbf{x}_i y_i - \epsilon^*_i = 0\), leading to \((\mathbf{w}^{*T}\mathbf{x}_i)y_i = 1\). This signifies that the data point resides exactly on the margin boundary.If \(\alpha_i^* = C\):

When \(\alpha_i^*\) takes on the maximum value \(C\), the dual variable \(\beta_i^*\) becomes \(0\). Equation [2] establishes that \(\epsilon_i^*\ge0\). Equation [1] implies \(1 - \mathbf{w}^{*T}\mathbf{x}_i y_i - \epsilon^*_i = 0\), which further simplifies to \(1 - \mathbf{w}^{*T}\mathbf{x}_i y_i = \epsilon^*_i\). This results in \(1 - \mathbf{w}^{*T}\mathbf{x}_i y_i \ge 0\), indicating that the data point is misclassified or lies within the margin.If \((\mathbf{w}^{*T}\mathbf{x}_i)y_i < 1\):

Given that \((\mathbf{w}^{*T}\mathbf{x}_i)y_i < 1\), the inequality \((\mathbf{w}^{*T}\mathbf{x}_i)y_i + \epsilon_i^* \ge 1\) holds. By manipulating this inequality, we deduce \(\epsilon_i^* > 0\). From equation [2], it follows that \(\beta_i^* = 0\), and consequently, \(\alpha_i^* = C\).If \((\mathbf{w}^{*T}\mathbf{x}_i)y_i = 1\):

For data points that satisfy \((\mathbf{w}^{*T}\mathbf{x}_i)y_i = 1\), we once again consider the inequality \((\mathbf{w}^{*T}\mathbf{x}_i)y_i + \epsilon_i^* \ge 1\), leading to \(\epsilon_i^* \ge 0\). If \(\epsilon_i^* > 0\), equation [2] indicates \(\beta_i^* = 0\), resulting in \(\alpha_i^* = C\). On the other hand, if \(\epsilon_i^* = 0\), equation [2] implies \(\beta_i^* \ge 0\), and as a consequence, \(\alpha_i^* \in [0, C]\).If \((\mathbf{w}^{*T}\mathbf{x}_i)y_i > 1\):

When \((\mathbf{w}^{*T}\mathbf{x}_i)y_i > 1\), the inequality \(1 - \mathbf{w}^{*T}\mathbf{x}_i y_i - \epsilon^*_i < 0\) holds. From equation [1], we conclude that \(\alpha_i^* = 0\), leading to \(\beta^*_i = C\) as per the equation \(\alpha_i^* + \beta_i^* = C\). Furthermore, equation [2] reveals that \(\epsilon_i^* = 0\).

Summary of Scenarios

In summary, we have established the following relationships between the dual variable \(\alpha_i^*\) and the decision boundary defined by \((\mathbf{w}^{*T}\mathbf{x}_i)y_i\):

- \(\alpha_i^* = 0\): Data point is correctly classified and outside the margin.

- \(0 < \alpha_i^* < C\): Data point lies exactly on the margin boundary.

- \(\alpha_i^* = C\): Data point is misclassified or inside the margin.

- \((\mathbf{w}^{*T}\mathbf{x}_i)y_i < 1\): Dual variable \(\alpha_i^* = C\).

- \((\mathbf{w}^{*T}\mathbf{x}_i)y_i = 1\): Dual variable \(\alpha_i^* \in [0, C]\) if \(\epsilon_i^* = 0\), else \(\alpha_i^* = C\).

- \((\mathbf{w}^{*T}\mathbf{x}_i)y_i > 1\): Dual variable \(\alpha_i^* = 0\).